Using the “INDEX, FOLLOW” Code as the Default Robots Tag

Explanation & Implementation Guide

Explanation

The robots meta tag tells search engine crawlers how to handle a webpage. When no robots tag is present, crawlers typically assume “INDEX, FOLLOW,” which allows them to index the page and follow its links. That default works in most cases, but defining the tag explicitly keeps behavior consistent across templates and makes your intent visible to anyone auditing the site. Where directives are missing or inconsistent, critical pages can be mishandled, important content can drop out of the index, and crawl budget can be wasted on unimportant or duplicate pages.

Implementation Guide

Evaluate Existing Robots Meta Tags:

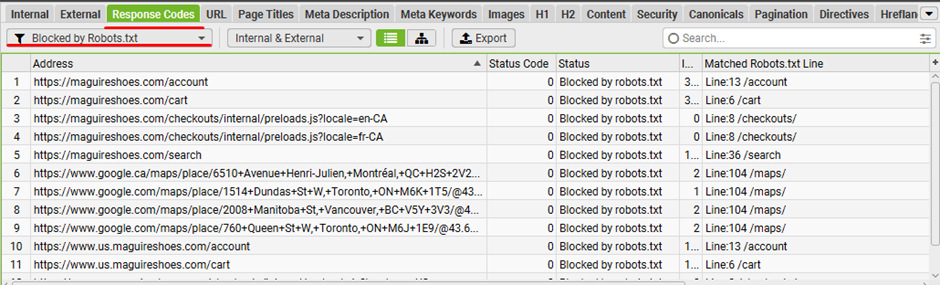

- Use tools like Screaming Frog SEO Spider to crawl your site and analyze current robots meta tags.

- Identify pages where the robots meta tag is missing or improperly defined.
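For a quick check without a dedicated crawler, the audit above can be approximated in a few lines of Python. This is a minimal sketch, not a replacement for a tool like Screaming Frog: the names `RobotsMetaParser`, `robots_directives`, and `audit` are illustrative, and it assumes you already have each page's HTML as a string.

```python
from html.parser import HTMLParser


class RobotsMetaParser(HTMLParser):
    """Collects the content of every <meta name="robots"> tag in a page."""

    def __init__(self):
        super().__init__()
        self.directives = []

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        # Attribute values can be None (e.g. <meta name>), so normalize them.
        attr_map = {name: (value or "") for name, value in attrs}
        if attr_map.get("name", "").strip().lower() == "robots":
            self.directives.append(attr_map.get("content", "").strip().lower())


def robots_directives(html):
    """Return the list of robots directives found in an HTML string."""
    parser = RobotsMetaParser()
    parser.feed(html)
    return parser.directives


def audit(pages):
    """Map each URL to its directive, or to 'missing' / 'multiple'.

    `pages` is a dict of URL -> HTML string (an assumption of this sketch).
    """
    report = {}
    for url, html in pages.items():
        found = robots_directives(html)
        if not found:
            report[url] = "missing"
        elif len(found) > 1:
            report[url] = "multiple"
        else:
            report[url] = found[0]
    return report
```

Pages reported as "missing" or "multiple" are the ones to review first, since those are where crawler behavior is either implicit or ambiguous.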

Identify Critical Pages:

- Pinpoint important pages, such as:

  - Product pages

  - Category pages

  - Blog posts and landing pages

- Ensure these pages have clearly defined robots meta tags to guide search engine crawlers effectively.

Set a Default Robots Meta Tag:

- Decide on the default directive, such as “INDEX, FOLLOW,” for pages without specific instructions.

- Choose alternative directives (e.g., “NOINDEX, NOFOLLOW”) for specific sections, based on your SEO goals.
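One way to keep the default and its exceptions in a single place is a small lookup table. The sketch below is only illustrative: the path prefixes (`/cart`, `/search`) and the function names are hypothetical examples, not a recommendation for any particular store.

```python
DEFAULT_DIRECTIVE = "index, follow"

# Hypothetical section overrides; replace with the sections that match your SEO goals.
SECTION_DIRECTIVES = {
    "/cart": "noindex, nofollow",
    "/search": "noindex, follow",
}


def directive_for(path):
    """Return the robots directive for a URL path, falling back to the default."""
    for prefix, directive in SECTION_DIRECTIVES.items():
        # Match the section itself or anything nested under it,
        # but not unrelated paths that merely share the prefix text.
        if path == prefix or path.startswith(prefix + "/"):
            return directive
    return DEFAULT_DIRECTIVE


def meta_tag(path):
    """Render the robots meta tag for a URL path."""
    return f'<meta name="robots" content="{directive_for(path)}">'
```

Writing the policy down this way also gives you something concrete to compare against when auditing what each page actually serves.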

Add Robots Meta Tag to Shopify Templates:

- Access your Shopify theme code through the admin dashboard (Online Store > Themes > Edit code).

- Insert the default robots meta tag in the <head> section of your theme’s layout file (typically theme.liquid). Use straight quotes around the attribute values, since curly quotes are not valid HTML attribute delimiters:

      <meta name="robots" content="index, follow">

- This ensures pages without explicitly defined robots meta tags default to your chosen directive.

Fixing the Issue

Use Specific Directives for Critical Pages:

Adjust robots meta tags for specific needs:

- For pages that shouldn’t appear in search results: <meta name="robots" content="noindex">

- For pages where links shouldn’t be followed: <meta name="robots" content="nofollow">

Apply Meta Robots Tags on Individual Pages:

Add meta robots tags directly to individual pages as required, tailoring them to meet unique SEO requirements.

Monitor and Update Regularly:

- Regularly assess your site’s performance in search results using tools like Google Search Console.

- Adjust your robots meta tag strategy in response to:

  - Content updates

  - Changes in SEO goals

  - Evolving search engine guidelines

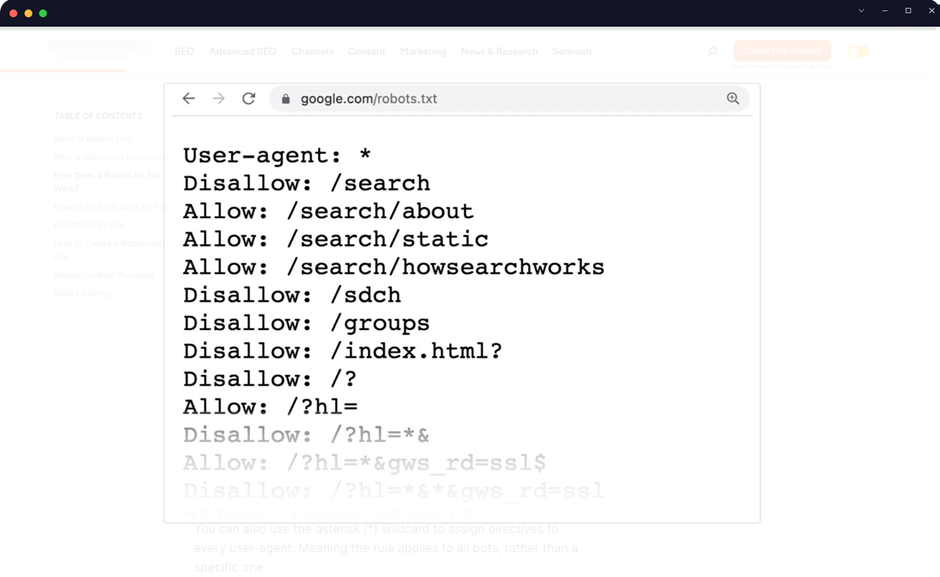

Leverage Robots.txt for Advanced Control:

- Use the robots.txt file to block crawlers from accessing specific directories or files:

      User-agent: *
      Disallow: /private/

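- Combine robots.txt with meta robots tags for more precise control over crawling and indexing. Keep in mind that robots.txt controls crawling while the meta tag controls indexing: a crawler cannot read a “NOINDEX” tag on a page that robots.txt prevents it from fetching, so avoid blocking pages you want removed from the index.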
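Before publishing a rule set, it can help to check which URLs it actually blocks. The following sketch uses Python's standard `urllib.robotparser` module; the helper name `is_crawlable` and the sample paths are assumptions made for illustration, and the rules are the `/private/` example from above.

```python
from urllib.robotparser import RobotFileParser

# Example rules matching the snippet above.
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
"""


def is_crawlable(robots_txt, url, user_agent="*"):
    """Return True if the given rules allow `user_agent` to fetch `url`."""
    parser = RobotFileParser()
    # parse() takes an iterable of lines, so no network request is made.
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


if __name__ == "__main__":
    for path in ("/products/shirt", "/private/report"):
        print(path, is_crawlable(ROBOTS_TXT, path))
```

This only answers whether a crawler may fetch a URL. Whether the page ends up indexed still depends on its robots meta tag, which is why both need to be reviewed together.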

Test and Validate:

- Test your robots directives with Google Search Console’s URL Inspection Tool to confirm how Googlebot handles your pages.

- Address any discrepancies or indexing issues promptly.

Document and Share the Strategy:

- Maintain documentation of your robots meta tag strategy for internal use.

- Share these directives with your team to ensure consistent implementation across your Shopify store.
