Robots.txt Review

Explanation & Implementation Guide

Explanation

The robots.txt file tells search engine crawlers which pages or sections of your website they may crawl. If it is configured improperly, it can inadvertently block search engines from crucial content. This hurts SEO performance because those pages cannot be crawled and may not be indexed or displayed properly in search results.

Implementation Guide

Identifying Robots.txt Issues:

Check Robots.txt File: Visit yourstore.com/robots.txt to access your Shopify store’s robots.txt file.

Examine it to ensure no critical directories or pages are being disallowed from search engine crawlers.

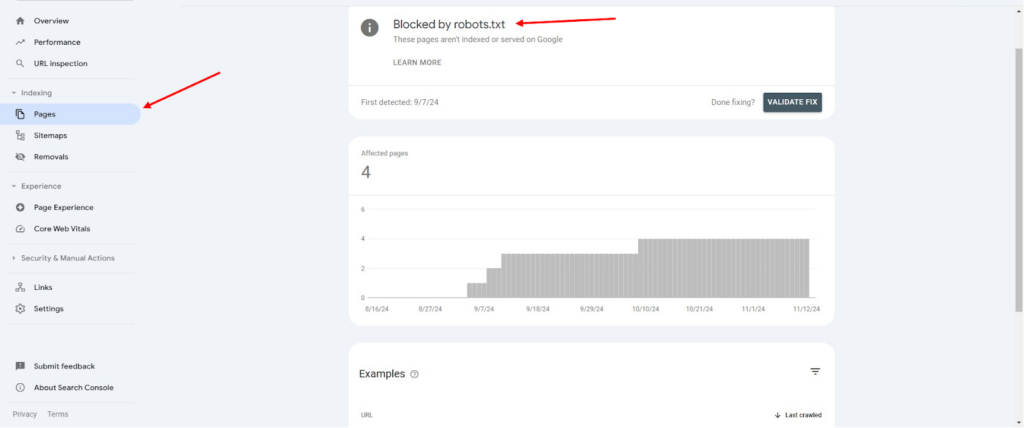
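You can also check a list of important pages against the file instead of reading it by eye. Python's standard-library urllib.robotparser can do a rough first pass. This is a minimal sketch under stated assumptions. The sample rules, the example.com base URL, and the list of critical paths are hypothetical. Note that robotparser applies the first matching rule using plain prefix matching, so it may not interpret the * and $ wildcards that Shopify's default file uses.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content; the "/products" rule is a deliberate
# misconfiguration that would hide every product page from crawlers.
SAMPLE_ROBOTS = """\
User-agent: *
Disallow: /admin
Disallow: /cart
Disallow: /checkout
Disallow: /products
"""

# Illustrative list of pages you expect search engines to crawl.
CRITICAL_PATHS = ["/", "/collections/all", "/products/blue-shirt", "/pages/about"]


def find_blocked(robots_txt: str, paths: list[str],
                 base: str = "https://example.com",
                 user_agent: str = "Googlebot") -> list[str]:
    """Return the paths that robots_txt disallows for user_agent."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [p for p in paths if not parser.can_fetch(user_agent, base + p)]


if __name__ == "__main__":
    for path in find_blocked(SAMPLE_ROBOTS, CRITICAL_PATHS):
        print(f"Blocked: {path}")
```

To check your live file instead of the sample, call parser.set_url("https://yourstore.com/robots.txt") followed by parser.read() in place of parse().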

Use Google Search Console:

- Log into Google Search Console.

- Open the Pages report (formerly called Coverage) under Indexing and look for URLs excluded with the reason Blocked by robots.txt.

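- Open the robots.txt report (Settings > robots.txt) to see which version of the file Google last fetched, along with any errors or warnings. This report replaces the robots.txt Tester that used to sit under Legacy tools and reports. To check whether a specific page is blocked, run it through the URL Inspection tool.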

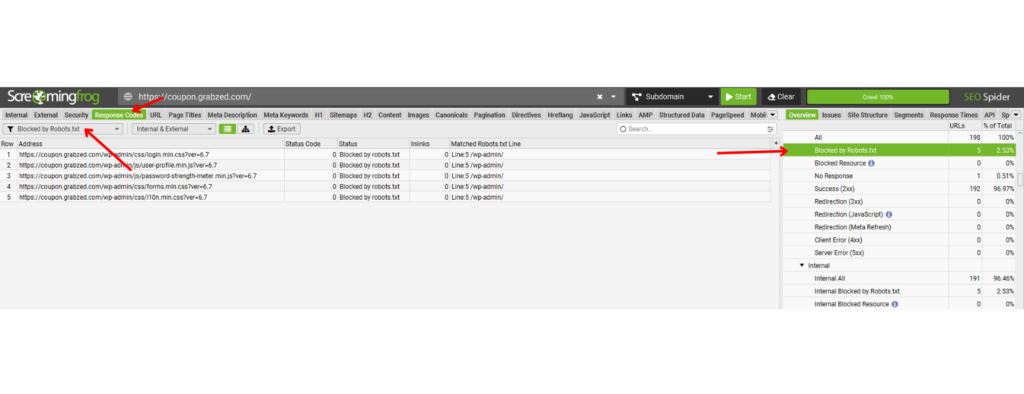

Use Screaming Frog:

- Download and open Screaming Frog SEO Spider.

- Enter your store’s URL and begin crawling.

- Check the Response Codes tab and filter by Blocked by Robots.txt to identify any pages blocked by the file.
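Once the crawl finishes, you can export the blocked URLs (for example, with the tab's Export button) and summarize them by site section. This makes it easier to spot a single rule that blocks a whole directory. This Python sketch assumes the export is a CSV with an Address column holding each URL. The column name is an assumption, so check the header of your own export. The sample rows are made up.

```python
import csv
import io
from collections import Counter
from urllib.parse import urlparse

# Made-up rows in the shape of a crawler export; the "Address"
# column name is an assumption, so adjust it to match your file.
SAMPLE_EXPORT = """\
Address,Status
https://example.com/products/blue-shirt,Blocked by Robots.txt
https://example.com/products/red-shirt,Blocked by Robots.txt
https://example.com/cart,Blocked by Robots.txt
"""


def blocked_sections(csv_text: str, column: str = "Address") -> Counter:
    """Count blocked URLs by their first path segment, e.g. '/products'."""
    counts: Counter = Counter()
    for row in csv.DictReader(io.StringIO(csv_text)):
        path = urlparse(row[column]).path
        # "/products/blue-shirt" -> "/products"; the homepage "/" stays "/".
        counts["/" + path.strip("/").split("/")[0]] += 1
    return counts


if __name__ == "__main__":
    for section, n in blocked_sections(SAMPLE_EXPORT).most_common():
        print(f"{section}: {n} blocked URL(s)")
```

For a real export, read the file with open(path, newline="", encoding="utf-8-sig") and pass its contents. The utf-8-sig codec also copes with a byte-order mark if one is present.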

Fixing the Issue

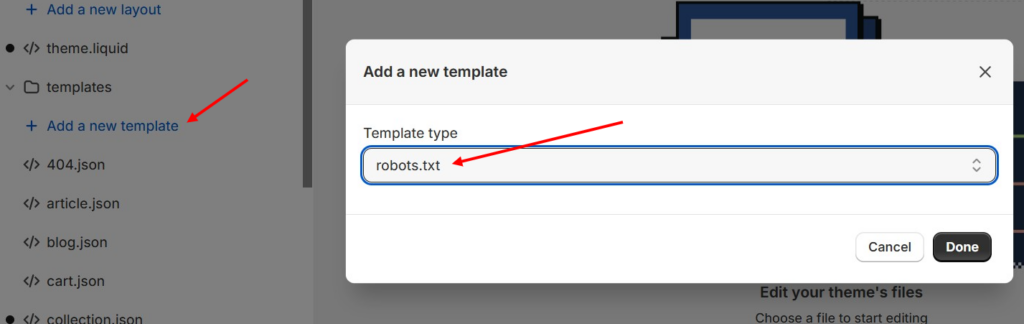

Editing Robots.txt in Shopify:

- Shopify generates a default robots.txt file, and you cannot upload or edit that file directly. However, you can manage indexing preferences from within your Shopify admin by deciding which pages should be visible to search engines.

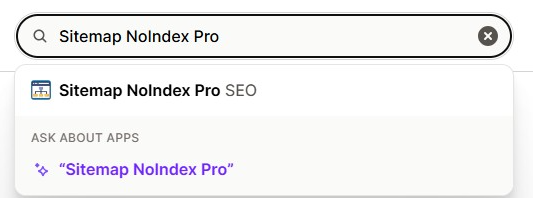

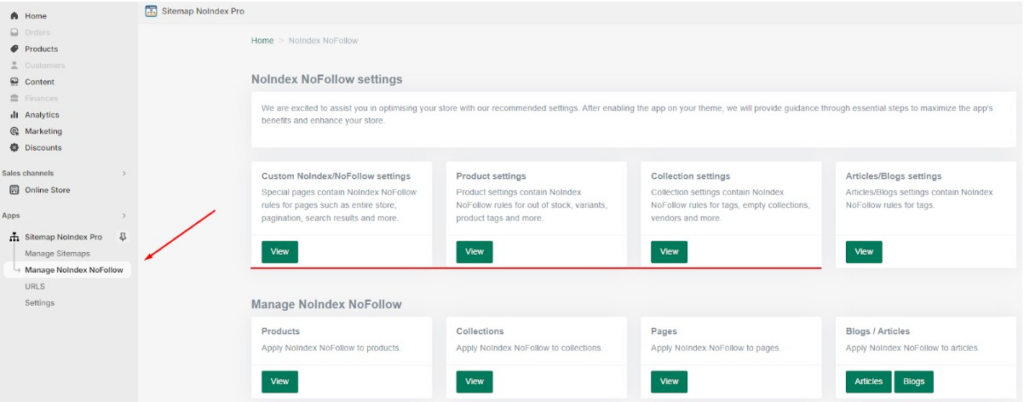

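- For more advanced customizations, you can add a robots.txt.liquid template to your theme (Online Store > Themes > Edit code > Add a new template > robots). Shopify supports this on all plans, not only Shopify Plus. Alternatively, you can use apps, edit other theme files, or add meta tags (e.g., noindex) for more granular control over content visibility. A noindex tag only works if the page is not blocked in robots.txt, because crawlers must fetch the page to read the tag.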

Updating Disallow Rules:

- If specific content is being incorrectly blocked, locate the corresponding Disallow rules in the robots.txt file (in Shopify, through the robots.txt.liquid template). Then modify or remove them so search engines can crawl the necessary pages.
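Before publishing an edited rule, it helps to test it against the URLs you care about. Python's urllib.robotparser may not handle wildcards, so this sketch hand-rolls the matching described in RFC 9309 and Google's robots.txt documentation:

* matches any sequence of characters.
* A trailing $ anchors the end of the URL.
* The longest matching pattern wins.
* Allow wins a tie.

This is a simplified illustration. It does no percent-encoding normalization and handles one user-agent group at a time. The before and after rule lists are hypothetical.

```python
import re

Rule = tuple[str, str]  # (directive, pattern), e.g. ("Disallow", "/products")


def pattern_to_regex(pattern: str) -> re.Pattern:
    """Translate a robots.txt path pattern into a regex matched from the start."""
    anchored = pattern.endswith("$")
    if anchored:
        pattern = pattern[:-1]
    # Escape literal characters, turning each '*' into '.*'.
    body = ".*".join(re.escape(part) for part in pattern.split("*"))
    return re.compile(body + ("$" if anchored else ""))


def is_allowed(rules: list[Rule], path: str) -> bool:
    """Apply longest-match semantics to one user-agent group's rules.

    `path` should include any query string, e.g. "/collections/all?sort_by=price".
    """
    best_length = -1
    allowed = True  # no matching rule means the URL may be crawled
    for directive, pattern in rules:
        if not pattern:
            continue  # an empty value (e.g. a bare "Disallow:") blocks nothing
        if pattern_to_regex(pattern).match(path):
            is_allow = directive.lower() == "allow"
            # Longer patterns are more specific; on a tie, Allow wins.
            if len(pattern) > best_length or (len(pattern) == best_length and is_allow):
                best_length = len(pattern)
                allowed = is_allow
    return allowed


# Hypothetical rules before and after removing an over-broad Disallow.
BEFORE: list[Rule] = [("Disallow", "/checkout"), ("Disallow", "/products"),
                      ("Disallow", "/collections/*sort_by*")]
AFTER: list[Rule] = [("Disallow", "/checkout"), ("Disallow", "/collections/*sort_by*")]

if __name__ == "__main__":
    for path in ["/products/blue-shirt", "/collections/all", "/collections/all?sort_by=price"]:
        print(path, "before:", is_allowed(BEFORE, path), "after:", is_allowed(AFTER, path))
```

Running the paths through both rule sets shows whether removing a rule unblocks product pages while keeping sorted collection URLs blocked.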

Re-submitting and Testing:

- After making changes, visit yourstore.com/robots.txt again to check the updated file.

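- In Google Search Console, open the robots.txt report to confirm that Google has fetched the updated version and that no errors remain. If the change is urgent, you can request a recrawl of the file from that report.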

- Finally, resubmit your sitemap in Google Search Console to encourage Google to recrawl your site.
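Before resubmitting, you may want to confirm that no URL listed in your sitemap is still blocked, because a sitemap should list only pages you want crawled. This Python sketch works under several assumptions:

* It reads a single urlset sitemap. Shopify's /sitemap.xml is an index that points to child sitemaps, so you would run the check on each child file.
* It uses urllib.robotparser, which relies on prefix matching and may not interpret * or $ wildcards.
* The sample files are made up.

```python
import xml.etree.ElementTree as ET
from urllib.robotparser import RobotFileParser

SITEMAP_NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}

# Made-up sample files for illustration.
SAMPLE_ROBOTS = """\
User-agent: *
Disallow: /cart
Disallow: /products
"""

SAMPLE_SITEMAP = """\
<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">
  <url><loc>https://example.com/products/blue-shirt</loc></url>
  <url><loc>https://example.com/pages/about</loc></url>
</urlset>
"""


def blocked_sitemap_urls(sitemap_xml: str, robots_txt: str,
                         user_agent: str = "Googlebot") -> list[str]:
    """Return <loc> URLs from a urlset sitemap that robots_txt disallows."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    root = ET.fromstring(sitemap_xml)
    locs = [el.text.strip() for el in root.findall("sm:url/sm:loc", SITEMAP_NS) if el.text]
    return [url for url in locs if not parser.can_fetch(user_agent, url)]


if __name__ == "__main__":
    for url in blocked_sitemap_urls(SAMPLE_SITEMAP, SAMPLE_ROBOTS):
        print("Listed in sitemap but blocked:", url)
```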
